Documentation the way it ought to be.

The End of the Line

Andy Johnson-Laird Monday January 27, 2014

We have had digital computers on Planet Earth since, oh, 1945, and, more or less from day one (well, it would have been day zero, but that's a computer geek joke), there has been a debate on how to represent the end of a line of text.

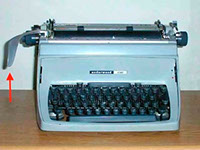

In the early days of typewriters (remember those?) you grabbed a large chromium plated lever and hauled on it, pushing it over to your right. Except, of course, in those countries where they write from right to left. They had to reef on the lever from right to left. I'm really not sure what they did in Japan, China, and Korea when they wanted to type from top to bottom, perhaps rotate the paper 90 degrees?

But anyway, this large lever, and the action associated with heaving on it, was called a carriage return because, err, well, I don't know how to break this to you, it returned the typewriter carriage to the left (at least in the West) for you to start a new line. Note how I slipped "new line" into that sentence. Hold that thought. You'll see why.

In fact, the aforesaid carriage return lever both returned the carriage to the start of a new line and cranked the platen (the rubber roller behind the piece of paper) the requisite distance up, so that you could avoid overtyping the line you had just finished.

In sum, the carriage return lever did both a carriage return and a line feed.

Thus it was pretty obvious that the name for the end of a line of text stored inside a computer would best be called something like "end of line" or "carriage return" or "line feed" and not some fancy-schmancy neologism like "conterminous adjacent text character terminator thingy."

Now, as the computer industry was still in its infancy and had yet to move into its adultery, we in the priesthood, such as we were, used all three terms interchangeably. We all knew what we meant -- and if it confused the plebs, that meant (a) we had job security, (b) the confusion was their problem not ours, and (c) that's what they deserved if they couldn't bother to learn binary.

Digital computers use zeros and ones to represent information. See! You knew there was a reason for calling them digital computers, didn't you? In fact that's all they can use. But back in the Really Dim Times, there were stories about how the First Computers only had zeros as ones had not yet been invented. These zero-based computers were, as you might expect, totally useless. The computer industry paused and held its breath until someone did invent the ones before we could move ahead.

For my part this was A Great Leap Forward (and unlike Mao Zedong's didn't involve massive loss of life). I knew I wanted to be a computer geek back in 1963 because it meant I could work indoors, command huge multi-million dollar machines to do my bidding, and would never have to worry about personal hygiene and wimmin.

However, there was still great wailing and gnashing of teeth and considerable, well, let's in the interest of good taste refer to it merely as mass debating, over this question: Given that you have, say, six or perhaps eight binary digits (zeros or ones), what particular combination would you use to represent, oh, I don't know, the letter "A"?

There were several encoding standards, factions, and zealots. There was the European Computer Manufacturers Association, with the unfortunate acronym ECMA, which sounded like a skin condition, and the American Standard Code for Information Interchange. At least ASCII didn't sound too dodgy. And there was also Control Data Corporation (R.I.P. -- one of my former employers), which adopted a bastardized version of ASCII that we all called "half-ASCII" because it really was half-assed. There was also something that IBM promulgated called EBCDIC, Extended Binary Coded Decimal Interchange Code. It was pronounced ebb-see-dick, which pretty much described how non-IBM people felt about the designer.

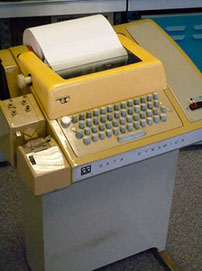

But everyone seemed to agree that there were several functions that the computer console had to perform, regardless of whether said console was a Telex teleprinter, a Friden Flexowriter, or, later on, an IBM Selectric typewriter. And these functions included Carriage Return and Line Feed. Good. Glad we're all agreed on that then, chaps.

As time wore on, ECMA was cured (or people forgot they had it), that total EBCDIC was voted off the island, and The World basically conceded that ASCII was The One True Encoding, and there were specific combinations of zeros and ones that represented the Carriage Return character ("CR," 00001101 if you must know) and the Line Feed character ("LF," 00001010).
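For anyone who would rather check those bit patterns than take them on faith, here is a minimal Python sketch. The helper name `bits` is made up for illustration; `ord` and `format` are ordinary Python built-ins.

```python
# The two ASCII control characters under discussion.
CR = "\r"  # Carriage Return
LF = "\n"  # Line Feed


def bits(ch):
    """Return the 8-bit binary pattern for a single ASCII character."""
    return format(ord(ch), "08b")


print("CR", ord(CR), bits(CR))  # CR 13 00001101
print("LF", ord(LF), bits(LF))  # LF 10 00001010
```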

These characters had very specific meanings still. Carriage Return meant "go back to the left, dude" (presuming you were in a country that wrote left to right), and Line Feed meant "go down to the next line." Everything was logical.

But then, as the French probably would never say, la merde se battre contre la ventilateur. The shit hit the fan. Because, as time went by, different operating systems emerged from the primordial slime. One was known as UNIX® (because it was the progeny of MULTICS, silly...) and the unwashed gurus of UNIX decreed that the end of a line of text shall be terminated with only the Line Feed character Because This Made Sense. Then there was CP/M, the operating system for microcomputers (circa 1974). CP/M used a Carriage Return followed by a Line Feed character to mark the end of a line. That tradition was carried forward into QDOS (Quick and Dirty Operating System) that Bill Gates purchased and turned into MS-DOS. Microsoft Windows, to this day, uses the Carriage Return and Line Feed to mark the end of a line of text.

Oh, there was also the BBC Acorn and some other minor players that used Line Feed followed by Carriage Return, which was perverse in the extreme, but fortunately nobody cared.

But Steve Jobs not only exhorted us to Think Different, Apple also wanted to Be Different. So the Classic Macs used just a Carriage Return all by its lonesome self for end of line. Apple then realized it had to Be Really Different, and subsequently changed to use just a Line Feed when Apple's user-friendly operating system was turned into a facade for Darwin, Apple's user-vicious underlying operating system for OS X.
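To keep the factions straight, here is a rough Python sketch that guesses which convention a chunk of raw bytes follows, based only on the first terminator it meets. The function name `detect_eol` and the `CONVENTIONS` table are invented for this illustration, and the guess is a heuristic: a file with mixed terminators will fool it.

```python
# Who uses what, as described in the text above.
CONVENTIONS = {
    "CRLF": "CP/M, MS-DOS, Windows",
    "LF": "UNIX, OS X",
    "CR": "Classic Mac",
    "LFCR": "BBC Acorn",
}


def detect_eol(data):
    """Guess the convention from the first line terminator in raw bytes."""
    for i, byte in enumerate(data):
        following = data[i + 1 : i + 2]  # empty at the end of the data
        if byte == 0x0D:  # Carriage Return
            return "CRLF" if following == b"\n" else "CR"
        if byte == 0x0A:  # Line Feed
            return "LFCR" if following == b"\r" else "LF"
    return "none"


sample = b"first line\r\nsecond line\r\n"
kind = detect_eol(sample)
print(kind, "->", CONVENTIONS.get(kind, "no terminator found"))
```

Note that it works on bytes, not on decoded text, so nothing gets a chance to quietly translate the terminators before you look at them.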

Which brings us to today.

I wasted most of today screwing around trying to get a program to run on an Apple Macintosh Pro under OS X that would read a text file created by the flight controller of an unmanned airborne vehicle and output a converted text file that could be processed by a program running on a computer using Microsoft Windows.

So here we are, 68 years after the birth of computing, and I'm still having to play silly buggers wondering why a program running under Windows (CR/LF) cannot handle the output text file created on a Mac (LF) reading in a text file created by a drone's flight controller (CR).
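The conversion itself need not be hard once you know what you are looking at. The following is a minimal Python sketch, not the program I was actually wrestling with; `convert_eol` is a hypothetical helper and the sample bytes are made up to stand in for a CR-terminated file.

```python
def convert_eol(data, target=b"\r\n"):
    """Rewrite CR, LF, or CR/LF line terminators in raw bytes as `target`."""
    # Collapse CR/LF pairs first so they are not counted twice,
    # then turn any remaining lone CRs into LFs.
    unified = data.replace(b"\r\n", b"\n").replace(b"\r", b"\n")
    return unified.replace(b"\n", target)


# Made-up sample: two lines terminated with bare Carriage Returns.
cr_terminated = b"line one\rline two\r"

print(convert_eol(cr_terminated))          # b'line one\r\nline two\r\n'
print(convert_eol(cr_terminated, b"\n"))   # b'line one\nline two\n'
```

The order of the replacements matters: converting lone CRs before collapsing the CR/LF pairs would turn every Windows line ending into two line breaks.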

Why was this so hard?

Well, here is the slightly geeky explanation. Don't worry. I'll hold your hand.

Start with the notion that TextWrangler, a free (and otherwise superb) text editor for the Mac, has a feature (ok, ok, it's a menu option called File -> Hex Dump Front Document) that shows you what's in a text document, end of line terminators included.

But there is zee leetle catch. It shows you how the document's lines are terminated when the document has been loaded into the computer's memory -- and in this form the lines are always terminated with Carriage Return, regardless of how they are actually terminated out on the computer's disk. TextWrangler's programmers dump out the contents in a form that purports to show the file's contents, but it's not really the file's contents? No shit, Sherlock! You really did that deliberately? Yup. They did. You must use the "File -> Hex Dump File" menu option to display the file's contents as they really are. So TextWrangler misrepresents the contents of a file when it does a hex dump of an open file. Who'da thunk it?

Add to that, if you use TextWrangler to create a text file on a Mac, out on disk the file has Line Feed terminators. But if you send that text file to someone as an email attachment (as I did) and that Someone happens to run Microsoft Outlook for their email on a Mac, when that Someone saves the attachment to their computer's hard disk, guess what? Outlook converts the end of line characters from Line Feeds to Carriage Returns. And that messes things up Big Time if that text file happens to be a program written in a programming language called gawk. When the gawk program interpreter tries to read in the text document it looks like just one huge long text line, 5,000 characters long and that confuses the hell out of it. The program doesn't work, but worse yet, it doesn't even fail. No error message. Nothing. Zip. Nada. It just doesn't work. So Someone kept calling and emailing me and saying, "Nope. Doesn't work here" and I kept responding, "Yup. It does work here." A Mexican standoff involving two guys, two computers, and two computer programs.
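Here is a small Python sketch of why the failure is so quiet. It is not gawk's actual reading code, just a stand-in: `split("\n")` plays the part of a reader that only recognizes Line Feed, and the three-line awk program in `program` is made up for the demonstration.

```python
# A tiny awk program whose lines end in Carriage Returns,
# the state the attachment was in after being saved.
program = "BEGIN { n = 0 }\r{ n++ }\rEND { print n }\r"

# A reader that only recognizes Line Feed sees one long line...
lf_only = program.split("\n")
print(len(lf_only))  # 1

# ...whereas a reader that understands CR, LF, and CR/LF sees three.
universal = program.splitlines()
print(len(universal))  # 3
```

Nothing in the first case is an error as far as the reader is concerned. It got a line, just not the lines you meant.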

So several hours later, a few of which involved using TeamViewer so I could see what was on Someone's computer screen and actually drive their computer even though they were 1,000 miles away, I finally figured out what was going on. It all came down to those bloody end of line characters.

It's enough to drive one crazy. It probably has. But at least my wife knows why I'm at the end of my line.

Ye ancient typewriter of yore with ye carriage return lever.

Public domain image, wikimedia.org.

The first computer consoles were teletypewriters -- and even today computers use the abbreviation TTY, though no TTY has been seen for decades.

Image courtesy of AlisonW, wikimedia.org

Copyright © 2020 Johnson-Laird Inc.